Posted by Charlie Stross

http://www.antipope.org/charlie/blog-static/2025/12/barnums-law-of-ceos.html

It should be fairly obvious to anyone who's been paying attention to the tech news that many companies are pushing the adoption of "AI" (large language models) among their own employees, from software developers to management, and the push is coming from the top down, as C-suite executives order their staff to use AI, Or Else. But we know that LLMs reduce programmer productivity: one major study showed that "developers believed that using AI tools helped them perform 20% faster -- but they actually worked 19% slower." (Source.)

Another recent study found that 87% of executives are using AI on the job, compared with just 27% of employees: "AI adoption varies by seniority, with 87% of executives using it on the job, compared with 57% of managers and 27% of employees. It also finds that executives are 45% more likely to use the technology on the job than Gen Zers, the youngest members of today's workforce and the first generation to have grown up with the internet.

"The findings are based on a survey of roughly 7,000 professionals age 18 and older who work in the US, the UK, Australia, Canada, Germany, and New Zealand. It was commissioned by HR software company Dayforce and conducted online from July 22 to August 6."

Why are executives pushing the use of new and highly questionable tools on their subordinates, even when they reduce productivity?

I speculate that to understand this disconnect, you need to look at what executives do.

Gordon Moore, co-founder and long-time CEO of Intel, explained how he saw the CEO's job in his book on management: a CEO is a tie-breaker. Effective enterprises delegate decision-making to the lowest level possible, because obviously decisions should be made by the people most closely involved in the work. But if a dispute arises, for example between two business units disagreeing on which of two projects to assign scarce resources to, the two units need to consult a higher-level management team about where their projects fit into the enterprise's priorities. Then the argument can be settled ... or not, in which case it propagates up through the layers of the management tree until it lands in the CEO's in-tray. At which point, the buck can no longer be passed on and someone (the CEO) has to make a ruling.

So a lot of a CEO's job, aside from leading on strategic policy, is to arbitrate between conflicting sides in an argument. They're a referee, or maybe a judge.

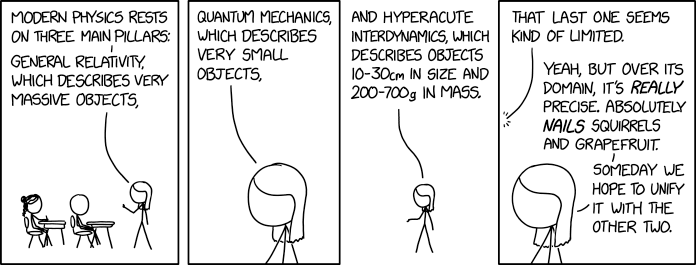

Now, today's LLMs are not intelligent. But they're very good at generating plausible-sounding arguments, because they're language models. If you ask an LLM a question, it does not answer the question; instead, it uses its probabilistic model of language to generate something that closely resembles the semantic structure of an answer.

LLMs are effectively optimized for bamboozling CEOs into mistaking their output for intelligent activity, rather than autocomplete on steroids. And so the corporate leaders extrapolate from their own experience to that of their employees, and assume that anyone not sprinkling magic AI pixie dust on their work is obviously a dirty slacker or a luddite.

(And this false optimization serves the purposes of the AI companies very well indeed, because CEOs make the big-ticket buying decisions, and internally all corporations ultimately turn out to be Stalinist command economies.)

Anyway, this is my hypothesis: we're seeing an insane push for LLM adoption in all lines of work, however inappropriate, because they directly exploit a cognitive bias to which senior management is vulnerable.
